I would recommend you read the testimony of Mr. Tristan Harris before the Senate Commerce sub-committee today (June 25, 2019). Harris is the Co-Founder and Executive Director for the Center for Humane Technology.

It should be available here:

https://www.commerce.senate.gov/public/index.cfm/hearings?ID=E4F108BD-47FA-4857-B8F4-4AE745C7A119

Here is a direct link to just his testimony in PDF form: http://bit.ly/tristanharristestimony

Just in case that link doesn’t work, I copied and pasted his testimony below, but it is missing a graph. So I added the graph at the top.

HERE IS HIS TESTIMONY:

“Good morning.

I want to argue today that persuasive technology is a massively underestimated and powerful force shaping the world and that it has taken control of the pen of human history and will drive us to catastrophe if we don’t take it back. Because technology shapes where 2 billion people place their attention on a daily basis shaping what we believe is true, our relationships, our social comparison and the development of children. I’m excited to be here with you because you are actually in a position to change this.

Let’s talk about how we got here. While we often worried about the point at which technology’s asymmetric power would overwhelm human strengths and take our jobs, we missed this earlier point when technology hacks human weaknesses. And that’s all it takes to gain control. That’s what persuasive technology does. I first learned this lesson as a magician as a kid, because in magic, you don’t have to know more than your audience’s intelligence – their PhD in astrophysics – you just have to know their weaknesses.

Later in college, I studied at the Stanford Persuasive Technology Lab with the founders of Instagram, and learned about the ways technology can influence people’s attitudes, beliefs and behaviors.

At Google, I was a design ethicist where I thought about how do you ethically wield this influence over 2 billion people’s thoughts. Because in an attention economy, there’s only so much attention and the advertising business model always wants more. So, it becomes a race to the bottom of the brain stem. Each time technology companies go lower into the brain stem, it takes a little more control of society. It starts small. First to get your attention, I add slot machine “pull to refresh” rewards which create little addictions. I remove stopping cues for “infinite scroll” so your mind forgets when to do something else. But then that’s not enough. As attention gets more competitive, we have to crawl deeper down the brainstem to your identity and get you addicted to getting attention from other people. By adding the number of followers and likes, technology hacks our social validation and now people are obsessed with the constant feedback they get from others. This helped fuel a mental health crisis for teenagers. And the next step of the attention economy is to compete on algorithms. Instead of splitting the atom, it splits our nervous system by calculating the perfect thing that will keep us there longer – the perfect YouTube video to autoplay or news feed post to show next. Now technology analyzes everything we’ve done to create an avatar, voodoo doll simulations of us. With more than a billion hours watched daily, it takes control of what we believe, while discriminating against our civility, our shared truth, and our calm.

As this progression continues the asymmetry only grows until you get deep fakes which are checkmate on the limits of the human mind and the basis of our trust.

But, all these problems are connected because they represent a growing asymmetry between the power of technology and human weaknesses, that’s taking control of more and more of society.

The harms that emerge are not separate. They are part of an interconnected system of compounding harms that we call “human downgrading”. How can we solve the world’s most urgent problems if we’ve downgraded our attention spans, downgraded our capacity for complexity and nuance, downgraded our shared truth, downgraded our beliefs into conspiracy theory thinking that we can’t construct shared agendas to solve our problems? This is destroying our sensemaking at a time we need it the most. And the reason why I’m here is because every day it’s incentivized to get worse.

We have to name the cause which is an increasing asymmetry between the power of technology and the limits of human nature. So far, technology companies have attempted to pretend they are in a relationship of equals with us when it’s actually been asymmetric. Technology companies have said that they are neutral, and users have equal power in the relationship with users. But it’s much closer to the power that the therapist, a lawyer or a priest has since they have massively superior compromising and sensitive information about what will influence user behavior, so we have to apply fiduciary law. Unlike a doctor or a lawyer, these platforms have the truth, the whole truth and nothing but the truth about us, and they can increasingly predict invisible facts about us that you couldn’t get otherwise. And with the extractive business model of advertising, they are forced to use this asymmetry to profit in ways that we know cause harm.

The key in this is to move the business model to be responsible. With asymmetric power, they have to have asymmetric responsibility. And that’s the key to preventing future catastrophes from technology that out-predicts human nature.

Government’s job is to protect citizens. I tried to change Google from the inside, but I found that it’s only been through external pressure – from government policymakers, shareholders and media – that has changed companies’ behavior.

Government is necessary because human downgrading changes our global competitiveness with other countries, especially with China. Downgrading public health, sensemaking and critical thinking while they do not would disable our long-term capacity on the world stage.

Software is eating the world, which Netscape founder Marc Andreessen said, but it hasn’t been made responsible for protecting the society that it eats. Facebook “eats” election advertising, while taking away protections for equal price campaign ads. YouTube “eats” children’s development while taking away the protections of Saturday morning cartoons.

50 years ago, Mr. Rogers testified before this committee about his concern for the race to the bottom in television that rewarded mindless violence. YouTube, TikTok, Instagram can be far worse, impacting exponentially greater number of children with more alarming material. And in today’s world, Mr. Rogers wouldn’t have a chance. But in his hearing 50 years ago, the committee made a decision that permanently changed the course of children’s television for the better. I’m hoping that a similar choice can be made today.

Thank you.

PERSUASIVE TECHNOLOGY & OPTIMIZING FOR ENGAGEMENT

Tristan Harris, Center for Humane Technology

Thanks to Yael Eisenstat, Roger McNamee for contributions.

INTRODUCTION TO WHO & WHY

I tried to change Google from the inside as a design ethicist after they bought my company in 2011, but I failed because companies don’t have the right incentive to change. I’ve found that it is only pressure from outside – from policymakers like you, shareholders, the media, and advertisers -- that can create the conditions for real change to happen.

WHO I AM: PERSUASION & MAGIC

Persuasion is about an asymmetry of power.

I first learned this as a magician as a kid. I learned that the human mind is highly vulnerable to influence. Magicians say “pick any card.” You feel that you’ve made a “free” choice, but the magician has actually influenced the outcome upstream because they have asymmetric knowledge about how your mind works.

In college, I studied at the Stanford Persuasive Technology Lab understanding how technology could persuade people’s attitudes, beliefs and behaviors. We studied clicker training for dogs, habit formation, and social influence. I was project partners with one of the founders of Instagram and we prototyped a persuasive app that would alleviate depression called “Send the Sunshine”. Both magic and persuasive technology represent an asymmetry in power – an increasing ability to influence other people’s behavior.

SCALE OF PLATFORMS AND RACE FOR ATTENTION

Today, tech platforms have more influence over our daily thoughts and actions than most governments. 2.3 billion people use Facebook, which is a psychological footprint about the size of Christianity. 1.9 billion people use YouTube, a larger footprint than Islam and Judaism combined. And that influence isn’t neutral.

The advertising business model links their profit to how much attention they capture, creating a “race to the bottom of the brain stem” to extract attention by hacking lower into our lizard brains – into dopamine, fear, outrage – to win.

HOW TECH HACKED OUR WEAKNESSES

It starts by getting our attention. Techniques like “pull to refresh” act like a slot machine to keep us “playing” even when nothing’s there. “Infinite scroll” takes away stopping cues and breaks so users don’t realize when to stop. You can try having self-control, but there are a thousand engineers on the other side of the screen working against you.

Then design evolved to get people addicted to getting attention from other people. Features like “Follow” and “Like” drove people to independently grow their audience with drip-by-drip social validation, fueling social comparison and the rise of “influencer” culture: suddenly everyone cares about being famous.

The race went deeper into persuading our identity: Photo-sharing apps that include “beautification filters” that alter our self-image work better at capturing attention than apps that don’t. This fueled “Body Dysmorphic Disorder,” anchoring the self-image of millions of teenagers to unrealistic versions of themselves, reinforced with constant social feedback that people only like you if you look different than you actually do. 55% of plastic surgeons in a 2018 survey said they’d seen patients whose primary motivation was to look better in selfies, up from just 13% in 2016. Instead of companies competing for attention, now each person competes for attention using a handful of tech platforms.

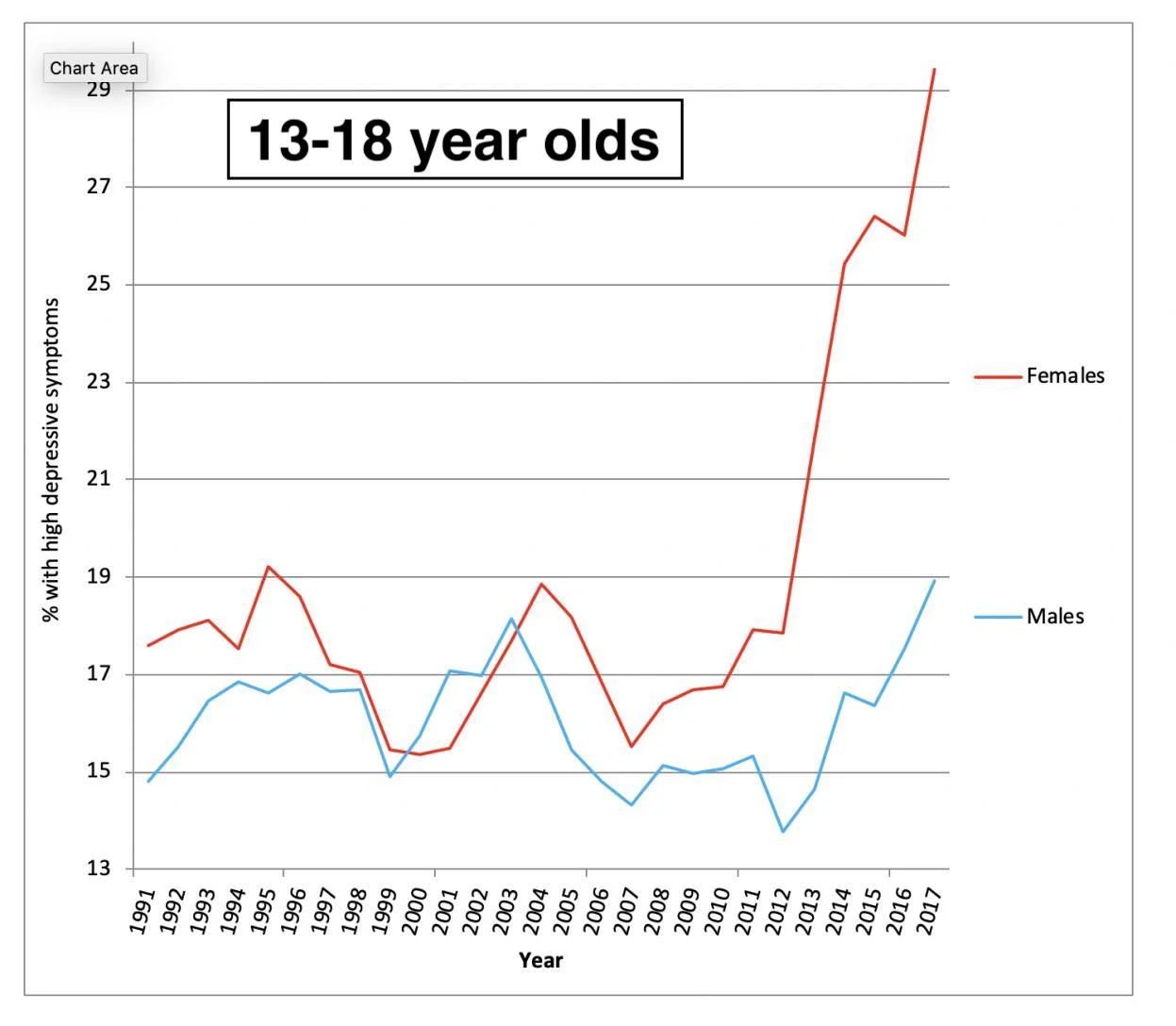

Constant visibility to others fueled mass social anxiety and a mental health crisis. It’s impossible to disconnect when you fear your social reputation could be ruined by the time you get home. After nearly two decades in decline, “high depressive symptoms” for 13-18 year old teen girls suddenly rose 170% between 2010 - 2017. Meanwhile, most people aren’t aware of the growing asymmetry between persuasive technology and human weaknesses.

USING AI TO EXTRACT ATTENTION, ROLE OF ALGORITHMS

The arms race for attention then moved to algorithms and A.I.: companies compete on whose algorithms more accurately predict what will keep users there the longest.

For example, you hit ‘play’ on a YouTube video and think, “I know those other times I get sucked into YouTube, but this time it will be different.” Two hours later you wake up from a trance and think “I can’t believe I did that again.” Saying we should have more self control hides an invisible asymmetry in power: YouTube has a supercomputer pointed at your brain.

When you hit play, YouTube wakes up an avatar, voodoo doll-like model of you. All of your video clicks, likes and views are like the hair clippings and toenail filings that make your voodoo doll look and act more like you so it can more accurately predict your behavior. YouTube then ‘pricks’ the avatar with millions of videos to simulate and make predictions about which ones will keep you watching. It’s like playing chess against Garry Kasparov, you’re going to lose. YouTube’s machines are playing too many moves ahead.

That’s exactly what happened: 70% of YouTube’s traffic is now driven by recommendations, “because of what our recommendation engines are putting in front of you,” said Neal Mohan, CPO of YouTube. With over a billion hours watched daily, algorithms have already taken control of two billion people’s thoughts.

TILTING THE ANT COLONY TOWARDS CRAZYTOWN

Imagine a spectrum of videos on YouTube, from the “calm” side -- rational, science-based, long, Walter Cronkite section, to the side of “crazytown”.

Because YouTube wants to maximize watch time, it tilts the entire ant colony of humanity towards crazytown. It’s “algorithmic extremism”:

Teen girls that played “diet” videos on YouTube were recommended anorexia videos.

AlgoTransparency.org revealed that the most frequent keywords in recommended YouTube videos were: get schooled, shreds, debunks, dismantles, debates, rips, confronts, destroys, hates, demolishes, obliterates.

To people watching NASA Moon landing videos, YouTube recommended “Flat Earth” conspiracies, which were recommended hundreds of millions of times before being downranked.

YouTube recommended Alex Jones InfoWars videos 15 billion times – more than the combined traffic of NYTimes, Guardian, Washington Post and Fox News.

More than 50% of fascist activists in a Bellingcat study credit the Internet with their red-pilling. YouTube was the single most frequently discussed website.

When the Mueller report was released about Russian interference in the 2016 election, RussiaToday’s coverage was the most recommended of 1,000+ monitored channels.

Adults watching sexual content were recommended videos that increasingly feature young women, then girls, then children playing in bathing suits (NYT article)

Fake news spreads six times faster than real news, because it's free to evolve to confirm existing beliefs unlike real news, which is constrained by the limits of what is true (MIT Twitter study)

Freedom of speech is not the same as freedom of reach. Everyone has a right to speak, but not a right to a megaphone that reaches billions of people. Social platforms amplify salacious speech without upholding any of the standards and practices required for traditional media and broadcasters. If you derived a motto for technology platforms from their observed behavior, it would be, “with great power comes no responsibility.”

They are debasing the information environment that powers our democracy. Beyond discriminating against any party, tech platforms are discriminating against the values that make democracy work: discriminating against civility, thoughtfulness, nuance and open-mindedness.

EQUAL, OR ASYMMETRIC?

Once you see the extent to which technology has taken control, we have to ask, is the nature of the business relationship between platforms and users one that is contractual, a relationship between parties of equal power, or is it asymmetric?

There has been a misunderstanding about the nature of the business relationship between the platform and the user, that they have asserted that it is a contractual relationship of parties with equal power. In fact, it is much closer to the relationship of a therapist, lawyer, priest. They have superior information, such an asymmetry of power, that you have to apply fiduciary law.

Saying “we give people what they want” or “we’re a neutral platform” hides a dangerous asymmetry: Google and Facebook hold levels of compromising information on two billion users that vastly exceed that of a psychotherapist, lawyer, or priest, while being able to extract benefit towards their own goals of maximizing certain behaviors.

THE ASYMMETRY WILL ONLY GET EXPONENTIALLY WORSE

The reason we need to apply fiduciary law now is because the situation is only going to get worse. A.I. will make technology exponentially more capable of predicting what will manipulate humans, not less.

There’s a popular conspiracy theory that Facebook listens to your microphone, because the thing you were just talking about with your friend just showed up in your news feed. But forensics show they don’t listen. More creepy: they don’t have to, because they can wake up one of their 2.3 billion avatar, voodoo dolls of you to accurately predict the conversations you’re most likely to have.

This will only get worse.

Already, platforms are easily able to:

Predict whether you are lonely or suffer from low self-esteem

Predict your big 5 personality traits with your temporal usage patterns alone

Predict when you’re about to get into a relationship

Predict your sexuality before you know it yourself

Predict which videos will keep you watching

Put together, Facebook or Google are like a priest in a confession booth who listens to two billion people’s confessions, but whose only business model is to shape and control what those two billion people do while being paid by a 3rd party. Worse, the priest has a supercomputer calculating patterns between two billion people’s confessions, so they can predict what confessions you’re going to make, before you know you’re going to make them – and sell access to the confession booth.

Technology, unchecked, will only be able to better predict what will influence our behavior, not less.

There are two ways to take control of human behavior – 1) you can build more advanced A.I. to accurately predict what will manipulate someone’s actions, 2) you can simplify humans by making them more predictable and reactive. Today, technology is doing both: profits within Google and Facebook get reinvested into better predictive models and machine learning to manipulate behavior, while simultaneously simplifying humans to respond to simpler and simpler stimuli. This is checkmate humanity.

THE HARMS ARE A SELF-REINFORCING SYSTEM

We often consider problems in technology as separate – addiction, distraction, fake news, polarization and teen suicides and mental health. They are not separate. They are part of an interconnected system of harms that are a direct consequence of a race to the bottom of the brain stem to extract attention.

Shortening attention spans, breakdown of our shared truth, increased polarization, rewarding outrage, depressed critical thinking, increased loneliness and social isolation, increasing teen suicide and self-harm - especially among girls, rising extremism, and conspiracy thinking – and ultimately debase the information environment and social fabric we depend on.

These harms reinforce each other. When it shrinks our attention spans, we can only say simpler, 140 character messages about increasingly complex problems – driving polarization: half of people might agree with the simple call to action, but will automatically enrage the rest. NYU psychology researchers found that each word of moral outrage added to a tweet raises the retweet rate by 17%. Reinforcing outrage compounds mob mentality, where people become increasingly angry about things happening at increasing distances.

This leads to “callout culture” with angry mobs trolling and yelling at each other for the least charitable interpretation of simpler and simpler messages. Misinterpreted statements lead to more defensiveness. This leads to more victimization, more baseline anger and polarization, and less social trust. “Callout culture” creates a chilling effect, and crowds out inclusive thinking that reflects the complex world we live in and our ability to construct shared agendas of action. More isolation also means more vulnerability to conspiracies.

As attention starts running out, companies have to “frack” for attention by splitting our attention into multiple streams – multi-tasking three or four simultaneous things at once. They might quadruple the size of the attention economy, but downgraded our attention spans. The average time we focus drops. Productivity drops.

NAMING THE INTERCONNECTED SYSTEM OF HARMS

These effects are interconnected and mutually reinforcing. Conservative pollster Frank Luntz calls it “the climate change of culture.” We at the Center for Humane Technology call it “human downgrading”:

While tech has been upgrading the machines, they’ve been downgrading humans -- downgrading attention spans, civility, mental health, children, productivity, critical thinking, relationships, and democracy.

IT AFFECTS EVERYONE

Even if you don’t use these platforms, it still affects you. You still live in a country where other people vote based on what they are recommended. You still send your kids to schools with other parents who believe anti-vaxx conspiracies recommended to them on social media. Measles cases increased 30% between 2016 and 2017, leading the WHO to call ‘vaccine hesitancy’ a top 10 global health threat.

We’re all in the boat together. Human downgrading is like a dark cloud descending upon society that affects everyone.

COMPETITION WITH CHINA

But human downgrading matters for global competition. Competing with China, whichever nation least downgrades its populations’ attention spans, critical thinking, mental health, and political polarization will win and be more productive, healthy and fast-moving on the global stage.

CONCLUSION

Government’s job is to protect citizens. All of this, I genuinely believe, can be fixed with changes in incentives that match the scope of the problem.

I am not against technology. The genie is out of the bottle. But we need a renaissance of “humane technology” that is designed to protect and care for human wellbeing and the social fabric upon which these technologies are built. We cannot rely on the companies alone to make that change. We need our government to create the rules and guardrails that allow market forces to create competition for technology that strengthens society and human empowerment, and protects us from these harms.

Netscape founder Marc Andreessen said in 2011, “software is eating the world” because it will inevitably operate aspects of society more efficiently than without technology: taxis, election advertising, content generation, etc.

But technology shouldn’t take over our social institutions and spaces, without taking responsibility for protecting them:

Technology “ate” election campaigns with Facebook, while taking away FEC protections like equal price campaign ads.

Tech “ate” the playing field for global information war, while replacing the protections of NATO and the Pentagon with small teams at Facebook, Google or Twitter.

Technology “ate” the dopamine centers of our brains -- without the protection of an FDA.

Technology “ate” children’s development with YouTube, while taking away the protections of Saturday morning cartoons.

Exactly 50 years ago, children’s TV show host Fred “Mister” Rogers testified to this committee about his concern for how the race to the bottom in TV rewarded mindless violence and harmed children’s development. Today's world of YouTube and TikTok is far worse, impacting exponentially greater number of children with far more alarming material. Today Mister Rogers wouldn’t have a chance.

But on the day Rogers testified, Senators chose to act and funded a caring vision for children in public television. It was a decision that permanently changed the course of children’s television for the better. Today I hope you choose protecting citizens and the world order – by incentivizing a caring and “humane” tech economy that strengthens and protects society instead of being destructive.

The consequences of our actions as a civilization are more important than they have ever been, while technology that informs these decisions are being downgraded. If we’re disabling ourselves from making good choices, that's an existential outcome.

Thank you.

---

VIDEO: HUMANE: A NEW AGENDA FOR TECH

You can view a video presentation of most of this material at:

https://humanetech.com/newagenda/

Technology is Downgrading Humanity; Let’s Reverse That Trend Now

Summary: Today’s tech platforms are caught in a race to the bottom of the brain stem to extract human attention. It's a race we're all losing. The result: addiction, social isolation, outrage, misinformation, and political polarization are all part of one interconnected system, called human downgrading, that poses an existential threat to humanity. The Center for Humane Technology believes that we can reverse that threat by redesigning tech to better protect the vulnerabilities of human nature and support the social fabric.

THE PROBLEM: Human Downgrading

What's the underlying problem with technology’s impact on society?

We’re surrounded by a growing cacophony of grievances and scandals. Tech addiction, outrage-ification of politics, election manipulation, teen depression, polarization, the breakdown of truth, and the rise of vanity/micro-celebrity culture. If we continue to complain about separate issues, nothing will change. The truth is, these are not separate issues. They are an interconnected system of harms we call human downgrading.

The race for our attention is the underlying cause of human downgrading. More than two billion people -- a psychological footprint bigger than Christianity -- are jacked into social platforms designed with the goal of not just getting our attention, but getting us addicted to getting attention from others. This is an extractive attention economy. Algorithms recommend increasingly extreme, outrageous topics to keep us glued to tech sites fed by advertising. Technology continues to tilt us toward outrage. It’s a race to the bottom of the brainstem that's downgrading humanity.

By exploiting human weaknesses, tech is taking control of society and human history. As magicians know, to manipulate someone, you don’t have to overwhelm their strengths, you just have to overwhelm their weaknesses. While futurists were looking out for the moment when technology would surpass human strengths and steal our jobs, we missed the much earlier point where technology surpasses human weaknesses. It’s already happened. By preying on human weaknesses -- fear, outrage, vanity -- technology has been downgrading our well-being, while upgrading machines.

Consider these examples:

Extremism exploits our brains: With over a billion hours on YouTube watched daily, 70% of those billion hours are from the recommendation system. The most recommended keywords in recommended videos were get schooled, shreds, debunks, dismantles, debates, rips, confronts, destroys, hates, demolishes, obliterates.

Outrage exploits our brains: Each moral-emotional word added to a tweet raised its retweet rate by 17% (PNAS).

Insecurity exploits our brains: In 2018, if you were a teen girl starting on a dieting video, YouTube’s algorithm recommended anorexia videos next because those were better at keeping attention.

Conspiracies exploit our brains: And if you are watching a NASA moon landing, YouTube would recommend Flat Earth conspiracies millions of times. YouTube recommended Alex Jones (InfoWars) conspiracies 15 billion times (source).

Sexuality exploits our brains: Adults watching sexual content were recommended videos that increasingly feature young women, then girls, then children playing in bathing suits (NYT article)

Confirmation bias exploits our brains: Fake news spreads six times faster than real news, because it's unconstrained while real news is constrained by the limits of what is true (MIT Twitter study)

Why did this happen in the first place? Because of the advertising business model.

Free is the most expensive business model we've ever created. We're getting “free” destruction of our shared truth, “free” outrage-ification of politics, “free” social isolation, “free” downgrading of critical thinking. Instead of paying professional journalists, the “free” advertising model incentivizes platforms to extract “free labor” from users by addicting them to getting attention from others and to generate content for free. Instead of paying human editors to choose what gets published to whom, it’s cheaper to use automated algorithms that match salacious content to responsive audiences -- replacing news rooms with amoral server farms.

This has debased trust and the entire information ecology.

Social media has created an uncontrollable digital Frankenstein. Tech platforms can’t scale safeguards to these rising challenges across the globe, more than 100 languages, in millions of FB groups or YouTube channels producing hours of content. With two billion automated channels or “Truman shows” personalized to each user, hiring 10,000 people is inadequate to the exponential complexity – there’s no way to control it.

The 2017 genocide in Myanmar was exacerbated by unmoderated fake news with only four Burmese speakers at Facebook to monitor its 7.3M users (Reuters report)

Nigeria had 4 fact checkers in a country where 24M people were on Facebook. (BBC report)

India’s population has 22 languages in their recent election. How many engineers or moderators at Facebook or Google know those languages?

Human downgrading is existential for global competition. Global powers that downgrade their populations will harm their economic productivity, shared truth, creativity, mental health and the wellbeing of the next generations – solving this issue is urgent to win the global competition for capacity.

Society faces an urgent, existential threat from parasitic tech platforms. Technology’s outpacing of human weaknesses is only getting worse – from more powerful addiction to more powerful deep fakes. Just as our world problems go up in complexity and urgency -- climate change, inequality, public health -- our capacities to make sense of the world and act together are going down. Unless we change course right now, this is checkmate on humanity.

WE CAN SOLVE THIS PROBLEM: Catalyzing a Transition to Humane Technology

Human downgrading is like the global climate change of culture. Like climate change it can be catastrophic. But unlike climate change, only about 1,000 people need to change what they're doing.

Because each problem – from “slot machines” hacking our lizard brains to “Deep Fakes” hacking our trust – has to do with not protecting human instincts, if we design all systems to protect humans, we can not only avoid downgrading humans, but we can upgrade human capacity.

Giving a name to the connected systems -- the entire surface area -- of human downgrading is crucial because without it, solution creators end up working in silos and attempt to solve the problem by playing an infinite “whack-a-mole” game.

There are three aspects to catalyzing Humane Technology:

1. Humane Social Systems. We need to get deeply sophisticated about not just technology, but human nature and the ways one impacts the other. Technologists must approach innovation and design with an awareness of protecting against the ways we’re manipulated as human beings. Instead of more artificial intelligence or more advanced tech, we actually just need more sophistication about what protects and heals human nature and social systems.

CHT has developed a starting point that technologists can use to explore and assess how tech affects us at the individual, relational and societal levels. (design guide.)

Phones protecting against slot machine “drip” rewards

Social networks protecting our relationships off the screen

Digital media designed to protect against DeepFakes by recognizing the vulnerabilities in our trust

2. Humane AI, not overpowering AI. AI already has asymmetric power over human vulnerabilities, by being able to perfectly predict what will keep us watching or what can politically manipulate us. Imagine a lawyer or a priest with asymmetric power to exploit you whose business model was to sell access to perfectly exploit you to another party. We need to convert that into AI that acts in our interest by making them fiduciaries to our values – that means prohibiting advertising business models that extract from that intimate relationship.

3. Humane Regenerative Incentives, instead of Extraction. We need to stop fracking people’s attention. We need to develop a new set of incentives that accelerate a market competition to fix these problems. We need to create a race to the top to align our lives with our values instead of a race to the bottom of the brain stem.

Policy and organizational incentives that guide operations of technology makers to emphasize the qualities that enliven the social fabric

We need an AI sidekick that's designed to protect the limits of human nature and be acting in our interests like a GPS for life that helps us get where we need to go.

The Center for Humane Technology supports the community in catalyzing this change:

Product teams at tech companies can integrate humane social systems design into products that protect human vulnerabilities and support the social fabric.

Tech gatekeepers such as Apple and Google can encourage apps to compete for our trust, not our attention, to fulfill values -- by re-shaping App Stores, business models, and the interaction between apps competing on Home Screens and Notifications.

Policymakers can protect citizens and shift incentives for tech companies.

Shareholders can demand commitments from companies to shift away from engagement-maximizing business models that are a huge source of investor risk.

VCs can fund that transition

Entrepreneurs can build products that are sophisticated about humanity.

Journalists can shine light on the systemic problems and solutions instead of the scandals and the grievances.

Tech workers can raise their voices around the harms of human downgrading.

Voters can demand policy from policymakers to reverse kids being downgraded.

There’s change afoot. When people start speaking up with shared language and a humane tech agenda, things will change. For more information, please visit the Center for Humane Technology.”

###